AS32934

Facebook FNA updates (2024) and their IP backbone analysis

Facebook FNA updates - April 2021

Over the last couple of years I have posted updates on the deployment of Facebook caching nodes (FNA) across the world. If you would like to read about the logic I use to pull the data, you can check the original post here. While the data is about Facebook FNA, it's highly likely that these networks also host Google GGC nodes and, to a lesser extent, Akamai caches.

My last post about it was back in Nov 2019, and it seems about time for a fresh check. So here we go…

Facebook FNA node update

In March 2018 I mapped Facebook's nodes globally using the airport string they use in their CDN URLs (detailed post here). Since then I have posted updates a couple of times as they keep adding more nodes. Recently there have also been questions via email and blog comments about updated data.

Here is the latest data as of 22 Nov 2019. It's published here.

Global stats


The total number of nodes increased from 2204 in Aug 2018 to 3184 in Nov 2019.

Facebook FNA Nodes Updates

Earlier this year, after APRICOT 2018, I posted a list of visible Facebook FNA (CDN caching) nodes across the world with their IPv4 and IPv6 addresses and AS names. In the following months I got quite a few emails from people mentioning that they had installed nodes but did not see them in the list (which was expected, since the list was static).

I re-ran my script to see the latest status of the nodes. During the last check (3rd March) I saw 1689 nodes. Now, on 26th Aug, i.e. after close to 6 months, the total number of nodes has increased to 2204.
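Putting the three snapshots quoted in these posts side by side (1689 in Mar 2018, 2204 in Aug 2018, 3184 in Nov 2019) makes the growth rate easier to see. A small sketch; the function names are illustrative and the counts are only the visible nodes the script found, not an official total.

```python
# Node counts quoted in these posts (date label -> visible FNA nodes).
SNAPSHOTS = [
    ("Mar 2018", 1689),
    ("Aug 2018", 2204),
    ("Nov 2019", 3184),
]


def growth(before: int, after: int) -> tuple[int, float]:
    """Return the absolute increase and the percentage increase."""
    delta = after - before
    return delta, 100.0 * delta / before


def growth_report(snapshots):
    """Yield (from_label, to_label, delta, pct) for consecutive snapshots."""
    for (label_a, a), (label_b, b) in zip(snapshots, snapshots[1:]):
        delta, pct = growth(a, b)
        yield label_a, label_b, delta, pct


if __name__ == "__main__":
    for src, dst, delta, pct in growth_report(SNAPSHOTS):
        print(f"{src} -> {dst}: +{delta} nodes ({pct:.1f}%)")
```

Run as-is, it prints +515 nodes (30.5%) for Mar to Aug 2018 and +980 nodes (44.5%) for Aug 2018 to Nov 2019.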

Mapping Facebook's FNA (CDN) nodes across the world!

Just back from APRICOT 2018. As I mentioned in my previous blog post, APNIC had its first Hackathon and it was fun (APNIC's blog post here). One project was on ranking CDNs using RIPE Atlas data. To achieve this, the team was trying to find strings/hostnames they could trace to in order to figure out the nearby CDN. As part of that, I suggested they look into www.facebook.com and carefully note the sources from which elements get loaded. It's quite common that Facebook.com (or Google.com, for that matter) is hosted on servers at a large PoP, while FNA (or GGC) serves only specific static content. FNA, of course, sits on the IPs of the ISP hosting it. In the source list we found scontent.fktm1-1.fna.fbcdn.net, which suggests that FNA hostnames follow the pattern scontent.fxxx1-1.fna.fbcdn.net, where xxx is the airport code. 1-1 probably means the 1st PoP in the 1st ISP there (a strong guess!). If there are more FNA nodes in a given area, the number goes up. The team used this, and for now the project is over. But on my way back to India, I thought this would be very interesting data if we pulled the full picture by systematically querying all possible IATA airport codes. This logic can be used for two things:
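The hostname pattern above lends itself to a brute-force check. A minimal sketch, assuming the scontent.f<code><n>-<m>.fna.fbcdn.net pattern holds and that a name which resolves indicates a node: it builds candidate names for a list of IATA codes and keeps those that resolve. The helpers (candidate_hostnames, resolve, find_nodes) and the search limits are hypothetical; this is not the exact script behind these posts.

```python
import socket
from itertools import product


def candidate_hostnames(iata_codes, max_first=2, max_second=2):
    """Build FNA-style hostnames such as scontent.fktm1-1.fna.fbcdn.net.

    The "<n>-<m>" suffix follows the 1-1 pattern seen on facebook.com;
    max_first/max_second are arbitrary search limits, not known maxima.
    """
    for code, first, second in product(
        iata_codes, range(1, max_first + 1), range(1, max_second + 1)
    ):
        yield f"scontent.f{code.lower()}{first}-{second}.fna.fbcdn.net"


def resolve(hostname):
    """Return sorted IPv4/IPv6 addresses for hostname, or [] if it does not resolve."""
    try:
        infos = socket.getaddrinfo(hostname, None, proto=socket.IPPROTO_TCP)
    except socket.gaierror:
        return []
    # Each entry is (family, type, proto, canonname, sockaddr); sockaddr[0] is the IP.
    return sorted({info[4][0] for info in infos})


def find_nodes(iata_codes, **limits):
    """Map each resolvable candidate hostname to its addresses (needs DNS access)."""
    found = {}
    for host in candidate_hostnames(iata_codes, **limits):
        addrs = resolve(host)
        if addrs:
            found[host] = addrs
    return found
```

For example, find_nodes(["KTM", "BOM"]) checks eight candidate names. A full run needs a list of IATA codes from a source of your choice, and mapping each address to an AS name needs a separate IP-to-ASN lookup, neither of which is included here.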

Welcome Facebook (AS32934) to India!

Today I was chatting with my friend Hari Haran. He mentioned that Facebook has started its PoP in Mumbai. This seems to be true: Facebook lists GPX Mumbai as a private peering PoP in its PeeringDB record.
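Checking a network's facilities in PeeringDB can be scripted. A rough sketch, assuming the public endpoint /api/net?asn=...&depth=2 returns the network under "data" with "netfac_set" expanded into objects carrying "name" and "city" fields; those field names and the depth behaviour are my assumptions, so verify them against the PeeringDB API documentation. The function names are hypothetical.

```python
import json
import urllib.request

# Assumed PeeringDB endpoint; depth=2 is expected to expand netfac_set into objects.
PEERINGDB_NET_URL = "https://www.peeringdb.com/api/net?asn={asn}&depth=2"


def fetch_network(asn):
    """Fetch the PeeringDB network record for an ASN (requires network access)."""
    url = PEERINGDB_NET_URL.format(asn=asn)
    with urllib.request.urlopen(url, timeout=30) as resp:
        payload = json.load(resp)
    records = payload.get("data", [])
    return records[0] if records else None


def facilities_in_city(network, city):
    """Return facility names from netfac_set whose city matches (case-insensitive)."""
    wanted = city.strip().lower()
    return sorted(
        fac.get("name", "")
        for fac in network.get("netfac_set", [])
        # With a lower depth the set may hold plain IDs, so skip non-dict entries.
        if isinstance(fac, dict) and (fac.get("city") or "").strip().lower() == wanted
    )
```

Usage would be facilities_in_city(fetch_network(32934), "Mumbai"); the result depends entirely on what is in the live record at the time.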

I triggered a quick IPv4 test traceroute to "www.facebook.com" from all Indian RIPE Atlas probes, with "www.facebook.com" resolved on the probe itself. The lowest latency is from Airtel Karnataka, and that is still hitting Facebook in Singapore. I do not see any of the networks with probe coverage hitting a Facebook node locally.
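A measurement like this can be requested and analysed through the RIPE Atlas REST API. A sketch, assuming the v2 measurement-creation fields as I understand them ("definitions", "probes", "resolve_on_probe", "is_oneoff") and the usual traceroute result layout (a per-hop "result" list whose replies carry "rtt", or "x": "*" on timeout); check both against the RIPE Atlas documentation. The helper names and the probe count are hypothetical, and "all probes" is approximated by requesting a count.

```python
import json
import urllib.request

ATLAS_MEASUREMENTS_URL = "https://atlas.ripe.net/api/v2/measurements/"


def traceroute_request(target, country, probes_requested, af=4):
    """Build a one-off traceroute definition, resolved on each probe."""
    return {
        "definitions": [
            {
                "type": "traceroute",
                "target": target,
                "af": af,
                "protocol": "ICMP",
                "resolve_on_probe": True,
                "description": f"traceroute to {target} from {country} probes",
            }
        ],
        "probes": [{"type": "country", "value": country, "requested": probes_requested}],
        "is_oneoff": True,
    }


def submit(request_body, api_key):
    """POST the measurement request; api_key is your own RIPE Atlas key."""
    req = urllib.request.Request(
        ATLAS_MEASUREMENTS_URL,
        data=json.dumps(request_body).encode(),
        headers={"Content-Type": "application/json", "Authorization": f"Key {api_key}"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=30) as resp:
        return json.load(resp)


def final_hop_rtt(result):
    """Lowest RTT (ms) at the last recorded hop, or None.

    If the traceroute did not complete, the last hop is not the target.
    """
    hops = result.get("result", [])
    if not hops:
        return None
    rtts = [reply["rtt"] for reply in hops[-1].get("result", []) if "rtt" in reply]
    return min(rtts) if rtts else None


def lowest_latency_probe(results):
    """Return (prb_id, rtt) for the probe with the smallest final-hop RTT."""
    scored = [(r.get("prb_id"), final_hop_rtt(r)) for r in results]
    scored = [(prb, rtt) for prb, rtt in scored if rtt is not None]
    return min(scored, key=lambda item: item[1]) if scored else None
```

Mapping the winning probe to its network (Airtel Karnataka in this case) and the final hop to a location still needs the probe metadata and a look at the hop addresses.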

DNS hack of Google, Facebook and more sites in .bd

Yesterday Google's Bangladeshi website google.com.bd was hacked via DNS. It was reported on the bdNOG mailing list in the morning, in a thread started by Mr Omar Ali.

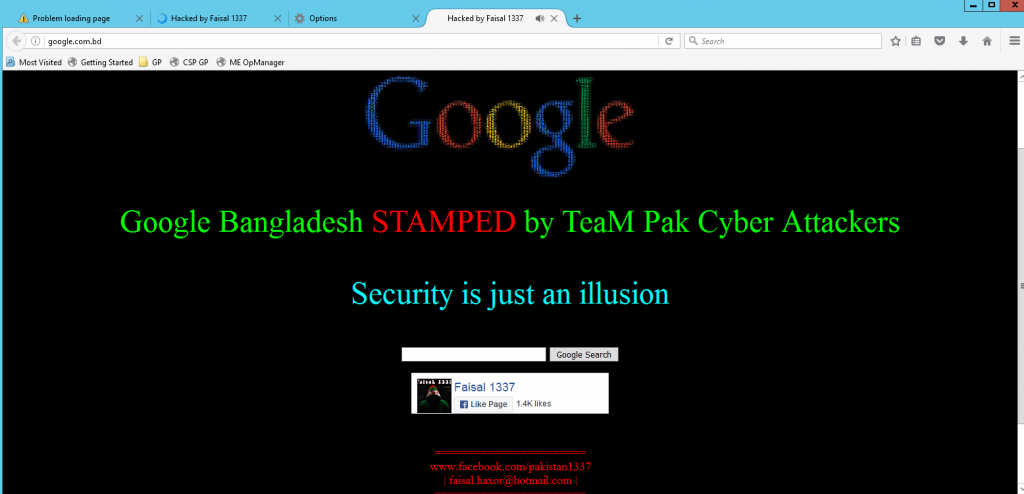

This clearly shows how the authoritative DNS for "com.bd." (which is the same as "bd.", by the way) was poisoned and was pointing to the attackers' authoritative DNS servers. Later Mr Farhad Ahmed posted a screenshot of google.com.bd showing the hackers' page.

Later Mr Sumon Ahmed mentioned that it happened because the web frontend of the .bd registry was compromised. This was an interesting hijack, as the attacker went after the registry's key infrastructure instead of Google's or Facebook's servers. It's also a good reminder of how DNS depends on its hierarchical structure by design, and that at this stage we need to focus on DNSSEC to add security to the current system. The .bd domain has faced issues multiple times this year; I hope it has a good, stable time in the upcoming year. In terms of stability, it is backed by PCH's anycast infrastructure, but PCH's DNS servers are published only in the NS records served by its existing authoritative servers, not in the parent zone (the root zone). Thus the point of failure remains and is yet to be fixed.
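Both problems described above come down to comparing NS sets: what the parent zone delegates to versus what the child zone's own servers list, and what a delegation contains versus what the operator expects. A small sketch of those comparisons, assuming you have already collected the NS names (for example with dig against a root server and against the child's servers); the function names and the nameserver names in the example are hypothetical.

```python
def _normalise(names):
    """Lower-case NS names and drop the trailing root dot for comparison."""
    return {name.lower().rstrip(".") for name in names}


def delegation_gaps(parent_ns, child_ns):
    """Compare the parent-side delegation with the NS set at the child apex.

    A resolver starting cold follows the parent's referral, so if every
    parent-listed server is unreachable it never learns about servers that
    appear only at the child apex; they do not remove that dependency.
    """
    parent, child = _normalise(parent_ns), _normalise(child_ns)
    return {
        "only_in_child": sorted(child - parent),
        "only_in_parent": sorted(parent - child),
        "in_both": sorted(parent & child),
    }


def unexpected_delegation(observed_ns, expected_ns):
    """Return NS names present in a delegation but not expected (a possible hijack)."""
    return sorted(_normalise(observed_ns) - _normalise(expected_ns))
```

For example, delegation_gaps(["ns1.example.bd."], ["ns1.example.bd.", "anycast.example.net."]) reports anycast.example.net as present only at the child, which is the kind of gap described for the PCH servers.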

Being Open: How Facebook Got Its Edge

An excellent presentation by James Quinn from Facebook on "Being Open: How Facebook Got Its Edge" at NANOG68. The YouTube link is here, and the video is embedded in the post below.

Some key points mentioned by James:

- BGP routing becomes inefficient as scale grows, especially when it comes to distributing traffic. A lot of traffic can end up concentrated on a specific PoP, and on top of that the BGP best path can simply be an inefficient one, chosen only because it has the shortest AS_PATH.

- Facebook came up with the cool idea of "evolving beyond BGP with BGP", where they use BGP concepts to overcome some BGP-related problems.

- He also points to the fact that IPv6 has a much larger address space, and heavy summarization can cause egress problems for them. A single route announcement can have almost an entire network behind it!

- Traffic management is based on a local and a global controller. The local controller picks efficient routes, injects them via BGP and balances traffic across local circuits within a given PoP/city. The global controller, on the other hand, is based on DNS and helps move traffic across cities.
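To put the IPv6 summarization point in numbers, Python's standard ipaddress module can count what sits behind a single announcement. This only illustrates scale and does not model Facebook's egress logic; 2001:db8::/32 is the IPv6 documentation prefix, used as a stand-in for one summarized route.

```python
import ipaddress
from itertools import islice

# 2001:db8::/32 is the documentation prefix, standing in for one summarized route.
summary = ipaddress.ip_network("2001:db8::/32")

# Number of /48 site prefixes hidden behind the one /32 announcement.
site_count = 2 ** (48 - summary.prefixlen)

# The whole IPv4 address space, for comparison (2**32 addresses).
ipv4_total = ipaddress.ip_network("0.0.0.0/0").num_addresses

# subnets() is lazy, so peek at the first few /48s without building all of them.
first_sites = list(islice(summary.subnets(new_prefix=48), 3))

print(f"/48s behind {summary}: {site_count}")
print(f"addresses behind {summary}: {summary.num_addresses}")
print(f"multiples of the whole IPv4 space: {summary.num_addresses // ipv4_total}")
print("first /48s:", [str(net) for net in first_sites])
```

One /32 hides 65,536 /48s and 2**64 times the entire IPv4 address space, all reachable through a single route.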

It’s wonderful to see that Facebook is solving the performance and load related challenges using fundamental blocks like BGP (local controller) and DNS (global controller). :)
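The local/global split can be illustrated with a toy model. This is a hypothetical sketch, not Facebook's implementation: Prefix, local_rebalance and global_pick_pop are invented names, the largest-prefix-first greedy policy is my own simplification, and the capacities and latencies are made up. The local part detours prefixes off overloaded circuits (overrides that, in the described design, would be injected as BGP routes); the global part picks which PoP a DNS answer should point users to.

```python
from dataclasses import dataclass, field


@dataclass
class Prefix:
    name: str
    demand: float                                    # traffic toward this prefix (Gbps)
    preferred: str                                   # circuit plain BGP would pick
    alternates: list = field(default_factory=list)   # other circuits that reach it


def local_rebalance(prefixes, capacity):
    """Toy local controller: move prefixes off overloaded circuits.

    Returns ({prefix name: new circuit}, resulting load per circuit).
    """
    load = {circuit: 0.0 for circuit in capacity}
    assignment = {}
    for p in prefixes:
        load[p.preferred] += p.demand
        assignment[p.name] = p.preferred
    overrides = {}
    # Handle the biggest prefixes first so fewer overrides are needed.
    for p in sorted(prefixes, key=lambda p: p.demand, reverse=True):
        current = assignment[p.name]
        if load[current] <= capacity[current]:
            continue
        # Try alternates with the most headroom first.
        for alt in sorted(p.alternates, key=lambda c: capacity[c] - load[c], reverse=True):
            if load[alt] + p.demand <= capacity[alt]:
                load[current] -= p.demand
                load[alt] += p.demand
                assignment[p.name] = alt
                overrides[p.name] = alt
                break
    return overrides, load


def global_pick_pop(user_city, latency_ms, pop_load, pop_capacity):
    """Toy global controller: answer DNS with the lowest-latency PoP that has headroom."""
    candidates = [
        (latency_ms[(user_city, pop)], pop)
        for pop in pop_capacity
        if (user_city, pop) in latency_ms and pop_load[pop] < pop_capacity[pop]
    ]
    return min(candidates)[1] if candidates else None
```

For example, with three prefixes totalling 13 Gbps preferring a 10 Gbps peering circuit, local_rebalance moves the largest one to transit and leaves the rest on the peer; global_pick_pop then only falls back to a farther PoP once the nearest one is full.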