MySQL replication status monitoring

Background

I am running my own authoritative DNS servers for the last few years. In earlier stages I just used registrar-provided DNS, later moved to “Cloud provider” provided DNS and ultimately settled for running my own auth DNS.

Two major requirements pushed me to self-host auth DNS:

- Requirement of REST API for DNS needed by the web servers to resolve Letsencrypt certbot DNS-based challenge. This allows me to have internally hosted tools with Letsencrypt issues TLS certificates instead of self-signed ones. The API access is mostly missing in the registrars hosted DNS.

- Occasional DDoS on my blog. There have been occasional DDoS on my blog (probably from random people who enjoy doing volumetric attacks). This always worried me about DNS bills during DDoS, especially for low TTL records. The last hosted DNS option I had over a year ago was Google Cloud DNS and they charge $0.40 per million queries per month. This can add a significant amount to the bill if under massive DDoS resulting in many millions of DNS queries. Plus per zone 20 cents charge gets expensive at a scale with a half a dozen domains.

After exploring a few options I settled for running PowerDNS with MySQL backend. This is kind of a comfort zone since I ran similar systems for my employers in past and it worked well. PowerDNS is a great option for authoritative DNS as it has nice documented REST API, CLI utility pdnsutil for easy high-level scripting, supports a bunch of backends to store DNS records from BIND like text files to MySQL. It is also good (automated) support to handle DNSSEC for signing the zone.

Replicating DNS records & checks

I rely on MySQL replication instead of DNS-based AXFR zone transfers. It’s much faster and more reliable. However, MySQL replication does not come with an in-built fail check. One can write some custom script to check and trigger alerts but I am against the idea of script triggering alerts because it doesn’t scale well. What if the script fails, what if some dependency fails over time as one goes through cycles of upgrades for MySQL, python or even just the OS? I wanted to improve the methods I use to check for the status of replication.

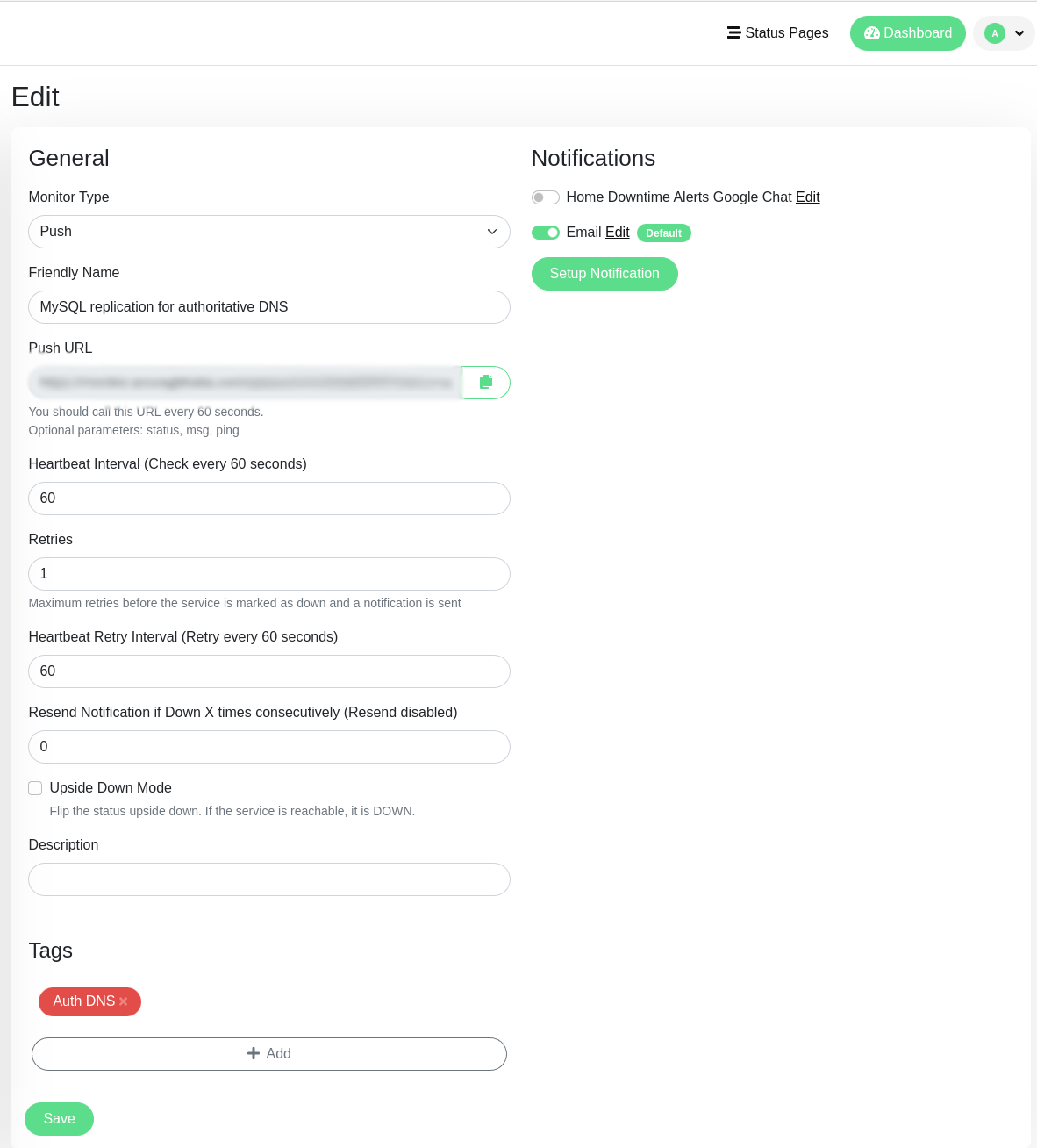

These days many alerting software comes with “push” based alerts. Here the logic is to get any customer script/app/code running “somewhere” far off, behind CGNAT, behind a firewall etc and call a public URL with a specific key. Checks can be based on that call like “alert if URL is not called in last 5mins or alert if URL is called etc”. Check “push-based alerts” in Uptime-Kuma.

mysql>

mysql> show slave hosts;

+-----------+------+------+-----------+--------------------------------------+

| Server_id | Host | Port | Master_id | Slave_UUID |

+-----------+------+------+-----------+--------------------------------------+

| 3 | | 3306 | 1 | 1faaa105-e52b-11ed-9913-0242ac190003 |

| 5 | | 3306 | 1 | f436b26b-e526-11ed-9955-0242ac120003 |

| 4 | | 3306 | 1 | 4eb7d236-e500-11ed-9a7e-0242ac120002 |

| 2 | | 3306 | 1 | 3e48a37c-e536-11ed-9987-0242ac120002 |

+-----------+------+------+-----------+--------------------------------------+

4 rows in set, 1 warning (0.01 sec)

mysql>

This shows all the servers with different server IDs. I haven’t defined hostnames but since unique server IDs exists, these can be checked via this Python script:

import mysql.connector

import os

import requests

# Get the MySQL connection details from environment variables

config = {

"user": os.getenv("MYSQL_USER"),

"password": os.getenv("MYSQL_PASSWORD"),

"host": os.getenv("MYSQL_HOST"),

"port": os.getenv("MYSQL_PORT"),

}

# Get the uptime token from environment variables

uptime_token = os.getenv("UPTIME_TOKEN")

# Connect to the MySQL server

conn = mysql.connector.connect(**config)

# Issue the "show slave hosts" command

cursor = conn.cursor()

cursor.execute("SHOW SLAVE HOSTS;")

results = cursor.fetchall()

# Check if server_ids 2,3,4, and 5 exist in the reply

server_ids = [2, 3, 4, 5]

if all(id in [result[0] for result in results] for id in server_ids):

# If the condition is met, call a URL

url = f"https://monitor.uptime-kuma-domain/api/push/{uptime_token}?status=up&msg=OK&ping="

response = requests.get(url)

print("URL called with status code:", response.status_code)

print("Replication up")

else:

# If the condition is not met, do nothing

print("Replication down, not calling the URL")

# Close the MySQL connection

conn.close()

Next, this can be called using crontab as:

crontab:

* * * * * /usr/local/bin/python3 /app/script.py >> /var/log/cron.log 2>&1

And both these can be packed inside a Docker container using Python as the base image:

FROM python:3.9-slim-buster

COPY script.py /app/script.py

RUN pip install mysql-connector requests

RUN apt update && apt install -y cron && apt clean

WORKDIR /app

# Copy the cronjob file to the container

COPY cronjob /etc/cron.d/mysql-replication-cron

# Give execution rights on the cronjob file

RUN chmod 0644 /etc/cron.d/mysql-replication-cron

# Apply the cronjob

RUN crontab /etc/cron.d/mysql-replication-cron

# Start the cron service in the background

CMD ["/bin/bash", "-c", "printenv > /etc/environment && cron -f"]

Now this container can be built & provided with the following environment variables:

MYSQL_HOST

MYSQL_PORT

MYSQL_USER

MYSQL_PASSWORD

UPTIME_TOKEN ""

This method can be deployed to monitor working of any custom script, any checks etc in a REST API based centralised system.