Large scale optical switching at Google - AS15169

It’s the day of Diwali. Happy Diwali 🪔 to everyone reading this post. 😀

I have been in holiday mode for a couple of days, mostly reading (and binge-watching). While reading the paper on Apollo, Google’s optical circuit switching, I looked around and came across a fascinating Google talk from last year. The talk covers how they made their own optical switches.

Quick notes

(Advance warning: these notes are from the eyes of a network engineer who works mostly at the IP/Ethernet layer and has a poor idea of the optical layer.)

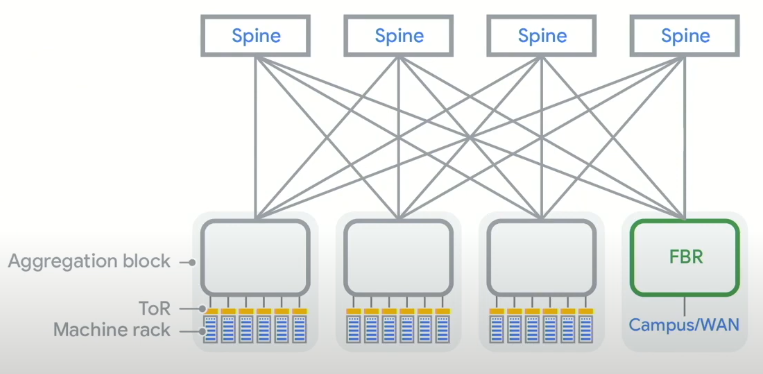

Architecture design

Google’s Jupiter datacenter architecture had the typical spine, aggregation and top-of-rack (ToR) switches. The idea is to have big fat switches on top forming the “spine”. Below them are aggregation switches, which are meshed with the spine switches and serve the ToR switches where servers connect. The ToR switch(es) in a rack aggregate all the servers in that rack. Because of the mesh between aggregation and spine, the number of connections is very high. Around 8 years ago, Google started replacing these spine switches with optical switches. This essentially gives them a way to mesh all the switches within the aggregation layer and control that optical connectivity from software. If you are watching the above embedded video from YouTube, those bits are coming from one of these optically switched systems.
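To get a feel for why the connection count gets so high, here is a rough back-of-the-envelope sketch. The function names and the switch counts (64 aggregation switches, 16 spine switches) are made up for illustration and are not Google’s numbers. It assumes one link per aggregation-spine pair in the spine design, and one adjacency per pair of aggregation switches in the direct mesh.

```python
def spine_mesh_links(num_aggregation: int, num_spine: int) -> int:
    """Links needed when every aggregation switch connects to every spine switch.

    Assumes exactly one link per (aggregation, spine) pair.
    """
    return num_aggregation * num_spine


def direct_mesh_pairs(num_aggregation: int) -> int:
    """Adjacencies needed to mesh the aggregation switches directly.

    Assumes one adjacency per unordered pair of aggregation switches.
    """
    return num_aggregation * (num_aggregation - 1) // 2


# Hypothetical sizes, purely for illustration.
print(spine_mesh_links(64, 16))  # 1024
print(direct_mesh_pairs(64))     # 2016
```

Either way the numbers grow quickly with the size of the fabric, which is presumably why being able to control that connectivity from software is attractive.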

Optical Switches

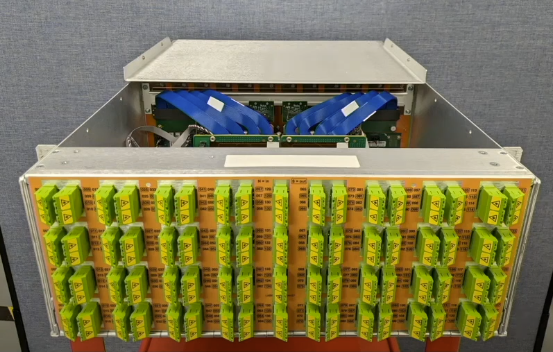

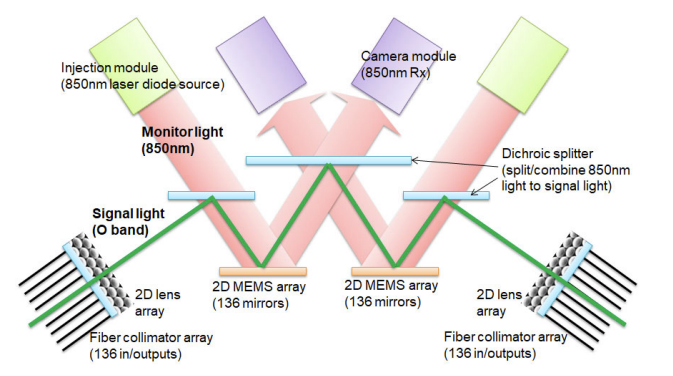

(Image source here)

In the IP world, we often call an Ethernet switch with SFP optical ports an “optical switch”, but this is a different case. Imagine a piece of hardware where hundreds of fibres land (Input 1, Input 2, … Input 100) and hundreds of fibres leave (Output 1, Output 2, … Output 100). This hardware can optically route any given input (e.g. Input 1) to any given output (e.g. Output 10). There are a few methods to do this; Google’s is based on MEMS mirrors. These are arrays of tiny mirrors (less than 1 mm) which can steer a given optical input at a given angle onto a pre-defined path. They do this in the millisecond range, which is crazy fast.

(Image source here)
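From an IP engineer’s point of view, the box behaves like a software-controlled patch panel: a one-to-one mapping from input ports to output ports that can be changed on demand. Below is a toy sketch of that idea. The class and method names are hypothetical and have nothing to do with Google’s actual control software, and the model ignores everything optical (mirror angles, loss, switching time).

```python
class OpticalCircuitSwitch:
    """Toy model of an N x N optical circuit switch (hypothetical, for illustration).

    Each input is steered to at most one output, and each output is fed
    by at most one input, like a mirror pointing a beam at one fibre.
    """

    def __init__(self, num_ports: int) -> None:
        self.num_ports = num_ports
        self._in_to_out = {}  # input port -> output port
        self._out_to_in = {}  # output port -> input port

    def _check(self, port: int) -> None:
        if not 1 <= port <= self.num_ports:
            raise ValueError(f"port {port} is outside 1..{self.num_ports}")

    def disconnect_input(self, inp: int) -> None:
        """Tear down the circuit starting at this input, if there is one."""
        out = self._in_to_out.pop(inp, None)
        if out is not None:
            del self._out_to_in[out]

    def connect(self, inp: int, out: int) -> None:
        """Steer `inp` to `out`, releasing any circuit already using either port."""
        self._check(inp)
        self._check(out)
        self.disconnect_input(inp)
        old_inp = self._out_to_in.pop(out, None)
        if old_inp is not None:
            del self._in_to_out[old_inp]
        self._in_to_out[inp] = out
        self._out_to_in[out] = inp

    def output_for(self, inp: int):
        """Return the output this input is steered to, or None if unconnected."""
        return self._in_to_out.get(inp)


ocs = OpticalCircuitSwitch(100)
ocs.connect(1, 10)        # Input 1 -> Output 10
print(ocs.output_for(1))  # 10
ocs.connect(2, 10)        # re-steer: Output 10 is now fed by Input 2
print(ocs.output_for(1))  # None
```

Keeping both directions of the mapping makes it cheap to enforce the one-to-one rule when a circuit is re-steered.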

East-West traffic is huge

Google started deploying this 7 years ago, and all their datacenters are using it. A considerable part of the motivation comes from modern workloads: datacenters have a lot of East-West traffic (within the DC) these days compared to North-South (outside of the DC). Google Cloud largely drives Google’s network decisions these days and, besides internal traffic for analytics, logs, backups and replication, there would be major traffic between GPUs, compute, storage, etc. Thus, they need a mesh of aggregation switches so that traffic goes directly between them instead of through another layer on top, which would get quite hot and become the congestion point. To deal with that, they need to connect big fat switches with lots of ports in a mesh. It’s quite interesting how, back in 2015-2016, when traffic was shooting up due to streaming, a lot of it ended up going to / aggregating on CDNs. Now, in the next bandwidth jump, it’s all local traffic for AI-based workloads, not even hitting the internet but staying mostly within a DC.