Building CDN around Backblaze B2

Last year I posted about self-hosting my website with high availability. Over time I have tweaked a few things to remove certain performance bottlenecks. One of them is static content delivery. Initially, I was storing both the website and its static content (served via cdn.anuragbhatia.com) at each edge location. They were logically separated but sitting at the same location.

The website (excluding the static content) is tiny (60MB as of now), but the static content is considerably larger. It includes not only the photos and videos embedded in the website but also things like screenshare videos, occasional temporary file sharing, etc. Since all of this is hosted on cheap low-end VMs with limited storage, the total storage size was slowly becoming a concern.

Thus over the last week, I migrated cdn.anuragbhatia.com from the garage cluster, where the origin sat locally on the edge VMs, to a CDN that does edge caching only, with the origin moved off to Backblaze B2.

There are several advantages to this design compared to the older setup, or even to just having a public Backblaze B2 (or any other object storage) bucket:

- Storage overall is cheap when done by object storage players. Backblaze B2 costs $0.006/GB/month or 0.51 INR/GB/month which is cheaper than getting a big fat VM with larger storage. Storage is largely a commodity & cheaper when done at scale.

- I maintain my own URLs (over my own domain) while serving content out of Backblaze B2. That way I am not locked to their URLs and can migrate off if needed in future without having to change URLs.

- While bandwidth overall is cheap at B2 ($0.01/GB, or 0.86 INR/GB), I still worry about hosting larger files there directly. Imagine a 1GB screen recording embedded on a website directly from B2. If someone scripts a loop for, say, 100k pulls, it would cost $1000, which isn’t an insignificant amount. There are ways to cap overall spending, but the internet is full of bill-shock stories across various cloud players. With my own cache frontend, files are cached and served from VM storage, which protects against such bill shock. I can even configure a speed limit per source IP.

- Local caching gives better performance. Cache VMs are sitting in Germany, India and the US. For India and the US, there is significantly better performance for cached traffic.

- Backblaze follows the usual 17+3 erasure-coding architecture (or 16+4 / 15+5 in some cases), where they can lose up to 3 servers out of 20 and still recover content. This gives redundancy at roughly 1.18x raw storage (20/17) instead of the 3x storage I was doing earlier.
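The numbers in the list above can be checked with a few lines of arithmetic. This is a minimal sketch that only uses the prices quoted in this post; the 100 GB bucket size and the function names are made up for illustration.

```python
# Back-of-the-envelope numbers for the points above.
# Prices are the ones quoted in this post; the 100 GB bucket is hypothetical.

STORAGE_USD_PER_GB_MONTH = 0.006  # B2 storage price quoted above
EGRESS_USD_PER_GB = 0.01          # B2 download price quoted above


def monthly_storage_cost(size_gb: float) -> float:
    """Cost of keeping size_gb in the bucket for one month."""
    return size_gb * STORAGE_USD_PER_GB_MONTH


def egress_cost(file_gb: float, pulls: int) -> float:
    """Cost of serving a file of file_gb straight from the bucket `pulls` times."""
    return file_gb * pulls * EGRESS_USD_PER_GB


def raw_per_usable(data_shards: int, parity_shards: int) -> float:
    """Raw storage consumed per unit of usable data with erasure coding."""
    return (data_shards + parity_shards) / data_shards


print(f"100 GB stored for a month: ${monthly_storage_cost(100):.2f}")
print(f"1 GB file pulled 100k times: ${egress_cost(1, 100_000):,.2f}")
print(f"17+3 erasure coding: {raw_per_usable(17, 3):.2f}x raw storage")
print(f"3 full copies: {3.0:.2f}x raw storage")
```

The second line is the bill-shock scenario: with a cache in front, repeated pulls of the same file hit VM storage instead of being billed as B2 egress each time.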

How does it work?

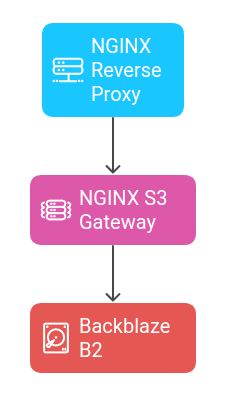

- All my traffic terminates on an NGINX reverse proxy in front (this was the case even before this setup). I kept it since I have just one IPv4 address at each of these locations, so TCP ports 80/443 terminate on the NGINX reverse proxy.

- In the backend I am using NGINX S3 Gateway. It can speak to any object storage bucket (over standard S3 protocol) and make the content available over HTTP. It can even pull content out of private S3 buckets.

- The backend/origin of the content sits on Backblaze B2 in Amsterdam. I can always replicate it further, but for now I have automated backups of that B2 bucket going into restic (de-duplicated backups).

- Traffic routing happens at the authoritative DNS layer. PowerDNS holds LUA records. The authoritative server looks at the source IP of the DNS resolver and replies with the cache IP nearest to the resolver’s address, based on a GeoIP database. It also does a basic health check on port 443 and stops handing out a cache IP when port 443 on it is not responding.

- Since overall requested objects are pretty small, I have set PROXY_CACHE_INACTIVE=24h for NGINX S3 Gateway. It will drop objects only when they have not been requested for at least 24 hours.
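The DNS steering step can be illustrated outside of PowerDNS. The following is a rough Python sketch of the idea only (pick the nearest cache that still answers on port 443); it is not the LUA record syntax and not how PowerDNS implements it. The IPs are documentation ranges, the coordinates are approximate, and what to return when nothing is healthy is a policy choice that this sketch answers with `None`.

```python
import math
import socket

# Hypothetical cache nodes: documentation-range IPs with rough coordinates,
# not the real servers from this post.
CACHES = {
    "192.0.2.10": (50.11, 8.68),      # Germany
    "198.51.100.10": (19.08, 72.88),  # India
    "203.0.113.10": (40.71, -74.01),  # US
}


def distance_km(a, b):
    """Great-circle (haversine) distance between two (lat, lon) points."""
    lat1, lon1, lat2, lon2 = map(math.radians, (*a, *b))
    h = (math.sin((lat2 - lat1) / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2)
    return 2 * 6371 * math.asin(math.sqrt(h))


def port_is_up(ip, port=443, timeout=2.0):
    """Basic health check: can a TCP connection be opened to ip:port?"""
    try:
        with socket.create_connection((ip, port), timeout=timeout):
            return True
    except OSError:
        return False


def pick_cache(resolver_location, caches=CACHES, is_up=port_is_up):
    """Return the healthy cache IP nearest to the resolver, or None."""
    healthy = [ip for ip in caches if is_up(ip)]
    if not healthy:
        return None
    return min(healthy, key=lambda ip: distance_km(resolver_location, caches[ip]))
```

In the real setup the resolver location would come from the GeoIP lookup on the resolver’s source address. Here it is passed in directly, and `is_up` can be swapped for a stub to try the logic without touching the network, for example `pick_cache((28.6, 77.2), is_up=lambda ip: True)`.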

Performance test

So how’s the performance? Let’s create a test file of 512MB in size and download it twice.

anurag@desktop ~> dd if=/dev/urandom of=test bs=64M count=8 iflag=fullblock

8+0 records in

8+0 records out

536870912 bytes (537 MB, 512 MiB) copied, 1.45449 s, 369 MB/s

anurag@desktop ~>

Here goes the first download on the CDN server itself which pulls it from the origin. The server is capped at 400Mbps in this case.

anurag@server03 ~> wget -O /dev/null https://cdn.anuragbhatia.com/temp/test

--2025-06-07 03:30:32-- https://cdn.anuragbhatia.com/temp/test

Resolving cdn.anuragbhatia.com (cdn.anuragbhatia.com)... 2400:d321:2191:8556:3::2, 82.180.146.82

Connecting to cdn.anuragbhatia.com (cdn.anuragbhatia.com)|2400:d321:2191:8556:3::2|:443... connected.

HTTP request sent, awaiting response... 200

Length: 536870912 (512M) [application/octet-stream]

Saving to: '/dev/null'

/dev/null 100%[===================================================================================>] 512.00M 20.8MB/s in 27s

2025-06-07 03:31:00 (18.8 MB/s) - '/dev/null' saved [536870912/536870912]

anurag@server03 ~>

Second test (from local cache):

anurag@server03 ~> wget -O /dev/null https://cdn.anuragbhatia.com/temp/test

--2025-06-07 03:32:17-- https://cdn.anuragbhatia.com/temp/test

Resolving cdn.anuragbhatia.com (cdn.anuragbhatia.com)... 2400:d321:2191:8556:3::2, 82.180.146.82

Connecting to cdn.anuragbhatia.com (cdn.anuragbhatia.com)|2400:d321:2191:8556:3::2|:443... connected.

HTTP request sent, awaiting response... 200

Length: 536870912 (512M) [application/octet-stream]

Saving to: '/dev/null'

/dev/null 100%[===================================================================================>] 512.00M 582MB/s in 0.9s

2025-06-07 03:32:17 (582 MB/s) - '/dev/null' saved [536870912/536870912]

anurag@server03 ~>
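To compare these two runs with the 400Mbps cap, the wget averages need converting to bits per second. A small sketch, assuming wget’s "MB/s" is 1024-based (consistent with it showing 536870912 bytes as 512M):

```python
MIB = 1024 * 1024


def mib_per_s_to_mbps(rate_mib_s: float) -> float:
    """Convert a 1024-based MB/s rate (as assumed for wget) to Mbit/s."""
    return rate_mib_s * MIB * 8 / 1_000_000


size_bytes = 536_870_912  # test file size from the dd output
cold_rate = 18.8          # wget average, first download (pulled from origin)
warm_rate = 582.0         # wget average, second download (served from cache)

print(f"file size: {size_bytes / MIB:.0f} MiB")
print(f"cold: {mib_per_s_to_mbps(cold_rate):.0f} Mbit/s")
print(f"warm: {mib_per_s_to_mbps(warm_rate):.0f} Mbit/s")
print(f"speed-up: {warm_rate / cold_rate:.0f}x")
```

The first pull works out to well under the 400Mbps cap, which suggests the fetch from the origin rather than the cap was the limit in that run. The cached pull is far above the cap, as expected for a download that runs on the cache server itself and never crosses the capped link.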

Note on favicon overwrite by B2

When I set this up, I found the Backblaze favicon showing up instead of mine. Somehow, when /favicon.ico is requested, Backblaze B2 replies with an HTTP 302 redirect to its own favicon.ico location.

anurag@desktop ~> curl -I https://cdn.anuragbhatia.com/favicon.ico

HTTP/2 302

server: openresty

date: Fri, 06 Jun 2025 22:11:27 GMT

location: https://secure.backblaze.com/bzapp_web_assets/public/pics/favicon.ico

strict-transport-security: max-age=63072000

origin-location: bom03

anurag@desktop ~>

This was strange as I had favicon.ico sitting right in the bucket root. Anyway, to deal with that, I ended up putting favicon.ico at a different location and then overriding the response at the NGINX proxy level.

location = /favicon.ico {

return 302 https://cdn.anuragbhatia.com/web/pages/favicon.ico;

}

This takes care of the issue for now:

anurag@desktop ~> curl -I https://cdn.anuragbhatia.com/favicon.ico

HTTP/2 302

server: openresty

date: Fri, 06 Jun 2025 22:13:45 GMT

content-type: text/html

content-length: 142

location: https://cdn.anuragbhatia.com/web/pages/favicon.ico

strict-transport-security: max-age=63072000;includeSubDomains; preload

anurag@desktop ~>
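The same check as the curl commands above can be scripted, for example to catch the redirect coming back after a config change. This is a minimal sketch using only the Python standard library; the function names are made up for illustration, and `http.client` is used because it does not follow redirects on its own.

```python
import http.client
from urllib.parse import urlsplit


def head_location(host, path="/favicon.ico", port=443, use_tls=True, timeout=10):
    """Send a HEAD request and return (status, Location header or None)."""
    conn_cls = http.client.HTTPSConnection if use_tls else http.client.HTTPConnection
    conn = conn_cls(host, port, timeout=timeout)
    try:
        conn.request("HEAD", path)
        resp = conn.getresponse()
        return resp.status, resp.getheader("Location")
    finally:
        conn.close()


def redirects_off_site(host, location):
    """True when a redirect target points at a different hostname than `host`."""
    if location is None:
        return False
    target = urlsplit(location).hostname
    return target is not None and target != host
```

Calling `head_location("cdn.anuragbhatia.com")` and passing the returned location to `redirects_off_site("cdn.anuragbhatia.com", location)` would flag the first response shown above (redirect to secure.backblaze.com) and pass the second one.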