Analysing Google Cloud routing tiers

Back in 2017, Google Cloud launched a lower-performance network tier, effectively giving customers the option of “cheaper” bandwidth with slightly reduced performance. This page describes their network tiers in detail.

That page says: “Cost optimized: Traffic between the internet and VM instances in your VPC network is routed over the internet in general.”

Background

For a while, I have wanted to find out how this works. In network routing, this concept is known as “hot potato routing vs cold potato routing”. When I was running IP routing workshops for APNIC, I would often give Google and Facebook as examples of the largest networks using cold potato routing. For those who may not know, the basic idea behind hot potato routing is to imagine the potato is hot and has to be handed off as soon as possible, i.e. at the nearest egress point, while a cold potato (an imaginary one!) can be kept in your hands over a long distance and handed off far away. How this is achieved in routing varies a bit, especially for cold potato routing. Hot potato routing normally happens by default because BGP best-path selection, when the other attributes are equal, prefers a path learned over eBGP to one learned over iBGP, and then the path with the lowest IGP cost to its next hop. The same preference is reflected in administrative distance: e.g. on Cisco, eBGP’s administrative distance is 20 while iBGP’s is 200. Thus if you learn a route both from the nearest egress network (an eBGP peer) and from your router in a different location (an iBGP peer), the eBGP path is preferred.
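To make the tie-breaking concrete, here is a minimal Python sketch of the tail end of BGP best-path selection. It assumes every other step (weight, AS-path length, origin, MED and so on) is tied and models only local preference, the eBGP-over-iBGP preference and the IGP cost to the next hop. `BgpPath`, `best_path` and the exit names are hypothetical. Raising local preference on the far exit is shown as one common way to get cold potato behaviour; it is not necessarily how any particular network does it.

```python
from dataclasses import dataclass


@dataclass
class BgpPath:
    name: str              # hypothetical label for the exit point
    local_pref: int = 100  # higher wins (checked first here)
    is_ebgp: bool = False  # learned from an external neighbour on this router
    igp_cost: int = 0      # IGP metric to reach this path's BGP next hop


def best_path(paths: list[BgpPath]) -> BgpPath:
    """Simplified BGP tie-break, assuming all other attributes are equal:
    highest local preference, then eBGP over iBGP, then lowest IGP cost."""
    return min(paths, key=lambda p: (-p.local_pref, not p.is_ebgp, p.igp_cost))


if __name__ == "__main__":
    # Default behaviour: the nearby eBGP exit wins -> hot potato.
    hot = [BgpPath("near-exit", is_ebgp=True), BgpPath("far-exit", igp_cost=60)]
    print(best_path(hot).name)   # near-exit

    # Raising local preference on the far exit overrides that -> cold potato.
    cold = [BgpPath("near-exit", is_ebgp=True),
            BgpPath("far-exit", local_pref=200, igp_cost=60)]
    print(best_path(cold).name)  # far-exit
```

Because local preference is compared before the eBGP/iBGP step, a single policy knob is enough to keep traffic on the backbone until the far exit.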

Cold potato routing can technically be achieved by tweaking route preference (e.g. BGP local preference), though in production it’s common to see it achieved using a mix of two techniques:

- Selective peering: peer with networks only where their customers are located. For example, Google/Facebook/Netflix/Cloudflare etc. would typically set up BGP sessions with Indian networks like Airtel, Jio etc. in India only, and would typically avoid BGP sessions with them in the US, EU etc. even when those networks have a presence there.

- DNS and frontend proxy: use DNS to map the end user’s traffic to a CDN/caching PoP as near to the user as possible, and then control the flow of packets to that edge point within the network. Take Google Mail, for example: it is not hosted in India, but if I trace to it from my connection in Haryana, I get:

```
anurag@desktop ~> mtr -wr mail.google.com
Start: 2023-11-03T04:27:16+0530
HOST: desktop Loss% Snt Last Avg Best Wrst StDev
1.|-- _gateway 0.0% 10 0.2 0.2 0.2 0.2 0.0
2.|-- 10.19.128.1 0.0% 10 8.5 8.1 7.7 8.6 0.3
3.|-- 74.125.147.233 0.0% 10 9.5 9.3 8.5 12.3 1.1
4.|-- 172.253.68.91 0.0% 10 9.5 9.1 8.6 9.5 0.3
5.|-- 142.251.54.87 0.0% 10 9.3 9.6 9.2 10.0 0.3
6.|-- del11s14-in-f5.1e100.net 0.0% 10 8.8 9.1 8.7 9.4 0.3
anurag@desktop ~>
```

So the flow is: Siti (AS17747) > Google peering (AS15169) > Google frontend proxy in DEL (Delhi).

Now, the 6th hop is not the ultimate source of the webmail application, but it is where my TCP session terminates; from there, packets flow internally between the actual source (likely Google Singapore) and this frontend proxy in Delhi. While this is done to optimise, cache static content, get a faster TCP handshake and possibly to avoid a DDoS burning the whole backbone, it also results in very controlled steering of packets. So although it is done from the application design side, the result is cold potato routing, i.e. a Google Singapore (likely!) > Google Delhi > Siti broadband flow instead of a handoff to Siti, or to Siti’s upstream, in Singapore.
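The DNS half of this can be sketched as an authoritative name server that answers each query with the frontend closest to the client. This is a toy Python model, not Google’s actual mapping system: the client regions, frontend names, RTT values and the 192.0.2.x addresses (RFC 5737 documentation range) are all made up for illustration. A real system also never sees a tidy region name; it sees the recursive resolver’s address or an EDNS Client Subnet hint.

```python
# Made-up RTTs (ms) from client regions to candidate frontends; illustrative only.
RTT_MS = {
    "haryana": {"del": 10, "bom": 30, "sin": 75},
    "singapore": {"del": 70, "bom": 60, "sin": 2},
}

# Hypothetical frontend addresses from the RFC 5737 documentation range.
FRONTEND_IP = {"del": "192.0.2.10", "bom": "192.0.2.20", "sin": "192.0.2.30"}


def resolve(client_region: str) -> str:
    """Return the A record of the frontend with the lowest RTT to the client.

    Once the client connects there, the frontend terminates TCP and fetches
    content over the provider's own backbone, which is cold potato by design.
    """
    rtts = RTT_MS[client_region]
    nearest = min(rtts, key=rtts.get)
    return FRONTEND_IP[nearest]


if __name__ == "__main__":
    print(resolve("haryana"))    # 192.0.2.10 (del)
    print(resolve("singapore"))  # 192.0.2.30 (sin)
```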

It’s worth checking out my friend Tom Paseka’s recent talk at AusNOG (YouTube link here) on how DNS now plays a key role in internet routing.

Analysing Google Cloud’s routing

Google’s Premium tier (cold potato routing) is available across all their regions, while the Standard tier (hot potato routing) is available only in the limited regions listed here. Notably, there is no Standard tier option in the Delhi or Mumbai regions. I will come to why this is possibly the case by the end of this post (read on!).

Let’s pick two machines somewhere far away, in the US-West1 region (Oregon, US), to get a better view of routing: one on the Standard tier and the other on the Premium tier. Since tier selection is done at the project level, I created two separate projects, set one of them to use the Standard tier (Premium is the default), and created a VM in each.

| No. | Name | Tier type | WAN IPv4 | Region | BGP aggregate prefix | Origin ASN |
|---|---|---|---|---|---|---|
| 1 | Cold Potato | Premium | 34.105.74.152 | US West 1 | 34.105.64.0/20 | AS396982 |
| 2 | Hot Potato | Standard | 35.212.143.198 | US West 1 | 35.212.128.0/17 | AS15169 and AS19527 |
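A quick way to sanity-check the table is Python’s standard `ipaddress` module: each VM address should fall inside its listed aggregate. The helper `gcp_reverse_name` just rebuilds the reverse-DNS naming visible in the traces below (octets reversed under `bc.googleusercontent.com`); that is an observed pattern, not a documented guarantee, and both function names are my own.

```python
import ipaddress

# VM addresses and covering aggregates, copied from the table above.
VMS = {
    "cold-potato (Premium)": ("34.105.74.152", "34.105.64.0/20"),
    "hot-potato (Standard)": ("35.212.143.198", "35.212.128.0/17"),
}


def covered(ip: str, prefix: str) -> bool:
    """True if the address falls inside the announced aggregate."""
    return ipaddress.ip_address(ip) in ipaddress.ip_network(prefix)


def gcp_reverse_name(ip: str) -> str:
    """Rebuild the observed rDNS pattern: reversed octets + bc.googleusercontent.com."""
    return ".".join(reversed(ip.split("."))) + ".bc.googleusercontent.com"


if __name__ == "__main__":
    for vm, (ip, prefix) in VMS.items():
        size = ipaddress.ip_network(prefix).num_addresses
        print(f"{vm}: {ip} in {prefix} ({size} addresses): {covered(ip, prefix)}; "
              f"rDNS {gcp_reverse_name(ip)}")
```

Note how much larger the Standard tier aggregate (/17, 32,768 addresses) is than the Premium one (/20, 4,096 addresses).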

India > GCP US West - Premium Tier

```
anurag@desktop ~> mtr 34.105.74.152 -wr
Start: 2023-11-03T05:16:16+0530
HOST: desktop Loss% Snt Last Avg Best Wrst StDev
1.|-- _gateway 0.0% 10 0.2 0.2 0.2 0.2 0.0
2.|-- 10.19.128.1 0.0% 10 10.2 9.9 9.4 10.3 0.3
3.|-- 74.125.147.233 0.0% 10 10.2 10.7 10.2 11.2 0.4
4.|-- 152.74.105.34.bc.googleusercontent.com 0.0% 10 234.5 238.0 234.2 239.8 2.0
anurag@desktop ~>
```

India > GCP US West - Standard Tier

```
anurag@desktop ~> mtr 35.212.143.198 -wr
Start: 2023-11-03T05:17:30+0530
HOST: desktop Loss% Snt Last Avg Best Wrst StDev
1.|-- _gateway 0.0% 10 0.2 0.2 0.2 0.2 0.0
2.|-- 10.19.128.1 0.0% 10 12.5 10.5 9.6 12.5 0.9
3.|-- 125.23.236.157 0.0% 10 12.8 14.5 12.4 26.9 4.4
4.|-- 116.119.61.204 0.0% 10 143.1 134.2 128.7 143.1 5.8
5.|-- ix-be-22.ecore4.emrs2-marseille.as6453.net 50.0% 10 123.8 124.3 123.8 124.7 0.4
6.|-- if-be-6-2.ecore2.emrs2-marseille.as6453.net 40.0% 10 279.8 279.8 279.5 280.3 0.3
7.|-- if-ae-25-2.tcore1.ldn-london.as6453.net 90.0% 10 281.0 281.0 281.0 281.0 0.0
8.|-- if-ae-26-2.tcore2.ldn-london.as6453.net 0.0% 10 291.2 291.3 290.5 292.8 0.7
9.|-- if-ae-32-2.tcore3.nto-newyork.as6453.net 90.0% 10 283.3 283.3 283.3 283.3 0.0
10.|-- if-ae-26-2.tcore1.ct8-chicago.as6453.net 10.0% 10 281.1 281.4 280.9 281.9 0.3
11.|-- if-ae-22-2.tcore2.ct8-chicago.as6453.net 0.0% 10 281.3 283.2 280.9 299.7 5.8
12.|-- if-ae-25-2.tcore2.00s-seattle.as6453.net 0.0% 10 281.4 284.1 281.1 308.0 8.4
13.|-- if-ae-2-2.tcore1.00s-seattle.as6453.net 0.0% 10 280.5 282.3 280.2 289.9 2.9
14.|-- 64.86.123.197 0.0% 10 264.5 264.9 264.4 265.7 0.4
15.|-- 198.143.212.35.bc.googleusercontent.com 0.0% 10 269.7 269.9 269.2 271.4 0.6
anurag@desktop ~>
```

The two pools are routed differently. Google announces the Premium tier aggregate over its peerings in Delhi, but not the Standard tier one. For the Standard tier, the route is Siti > Airtel > Tata Comm in Marseille, France, and Tata Comm (AS6453) then carries it all the way to Seattle for handover to Google. As stated in the table above, the origin ASNs also differ between the two.
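When comparing many of these traces, it helps to pull the hops out programmatically. Below is a small Python parser for `mtr -wr` report lines in the format shown above (hop, host, Loss%, Snt, Last, Avg, Best, Wrst, StDev). The regex and the `Hop`, `parse_mtr_report` and `hops_in_domain` names are my own; matching reverse-DNS suffixes such as `as6453.net` is only a heuristic for spotting a carrier, not an authoritative IP-to-ASN lookup.

```python
import re
from dataclasses import dataclass

# One hop line of `mtr -wr` report output, e.g.
#   "5.|-- ix-be-22.ecore4.emrs2-marseille.as6453.net 50.0% 10 123.8 124.3 123.8 124.7 0.4"
# Columns: hop, host, Loss%, Snt, Last, Avg, Best, Wrst, StDev.
# The "%" is optional because non-answering "???" hops show up as "100.0" in these traces.
HOP_RE = re.compile(
    r"^\s*(\d+)\.\|--\s+(\S+)\s+([\d.]+)%?\s+(\d+)"
    r"\s+([\d.]+)\s+([\d.]+)\s+([\d.]+)\s+([\d.]+)\s+([\d.]+)\s*$"
)


@dataclass
class Hop:
    number: int
    host: str
    loss_pct: float
    avg_ms: float


def parse_mtr_report(text: str) -> list[Hop]:
    """Extract hops from mtr report text, skipping prompt and header lines."""
    hops = []
    for line in text.splitlines():
        m = HOP_RE.match(line)
        if m:
            # Group 6 is the Avg column (groups: hop, host, loss, snt, last, avg, ...).
            hops.append(Hop(int(m[1]), m[2], float(m[3]), float(m[6])))
    return hops


def hops_in_domain(hops: list[Hop], domain: str) -> list[Hop]:
    """Hops whose reverse-DNS name ends with `domain` (a naming heuristic only)."""
    return [h for h in hops if h.host.endswith(domain)]


# A few lines copied from the Standard tier trace above.
SAMPLE = """\
HOST: desktop Loss% Snt Last Avg Best Wrst StDev
1.|-- _gateway 0.0% 10 0.2 0.2 0.2 0.2 0.0
4.|-- 116.119.61.204 0.0% 10 143.1 134.2 128.7 143.1 5.8
5.|-- ix-be-22.ecore4.emrs2-marseille.as6453.net 50.0% 10 123.8 124.3 123.8 124.7 0.4
13.|-- if-ae-2-2.tcore1.00s-seattle.as6453.net 0.0% 10 280.5 282.3 280.2 289.9 2.9
15.|-- 198.143.212.35.bc.googleusercontent.com 0.0% 10 269.7 269.9 269.2 271.4 0.6
"""

if __name__ == "__main__":
    hops = parse_mtr_report(SAMPLE)
    for hop in hops:
        print(f"{hop.number:>2} {hop.avg_ms:>6.1f} ms  {hop.host}")
    print("Tata Comm hops:", [h.number for h in hops_in_domain(hops, "as6453.net")])
```

Keep in mind that per-hop RTTs also include the path the ICMP reply takes back, so a jump at one hop does not by itself prove where the slow segment is.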

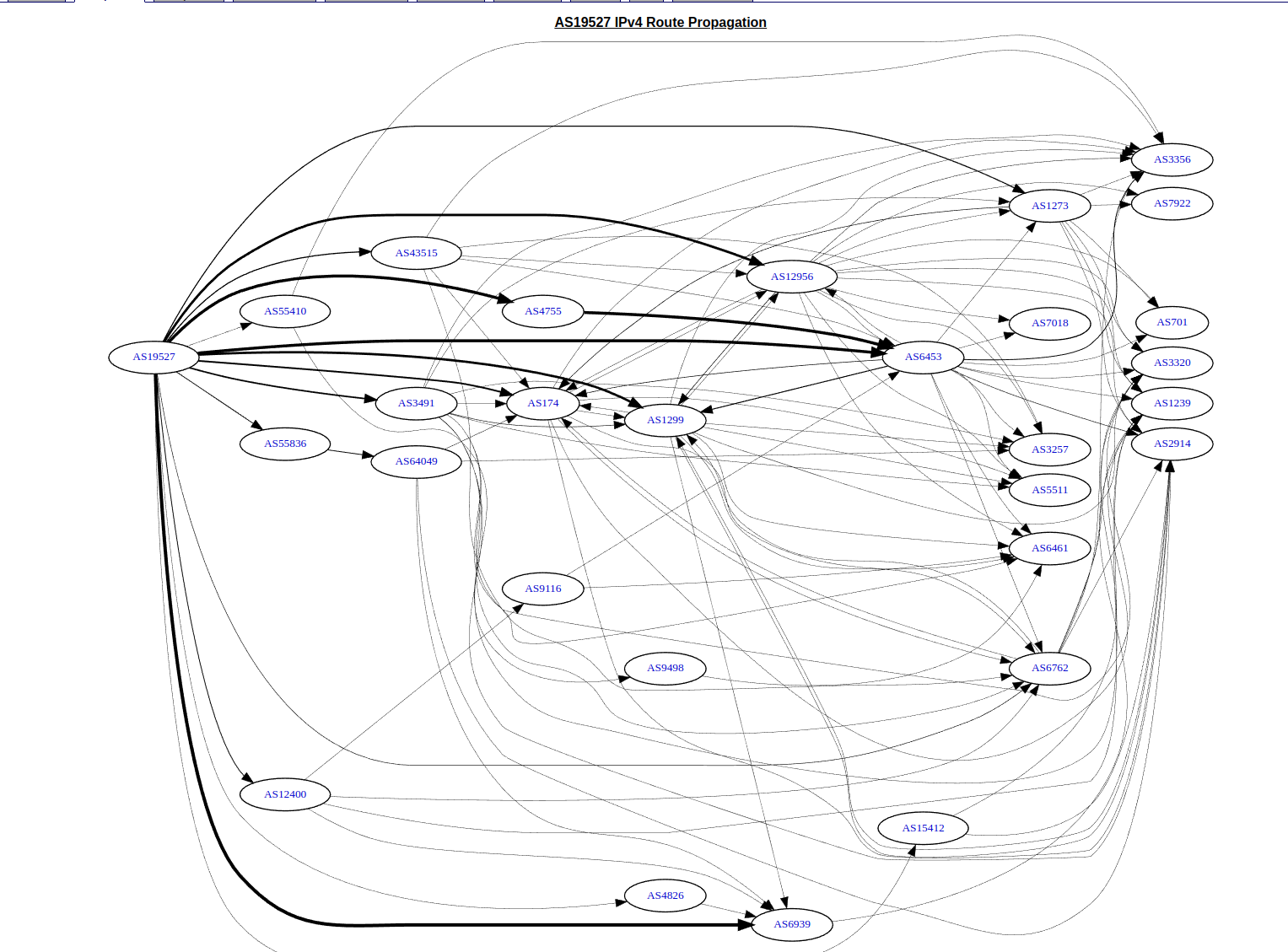

Let’s look at the AS19527 graph from bgp.he.net:

So it seems Google is using Vodafone C&W (AS1273), Telefonica (AS12956), Tata Comm (AS4755 & AS6453), Arelion (Telia, AS1299), PCCW (AS3491) etc. These are usually the transits, depending on the cloud region. As far as I can see, my test prefix for US-West1 is transiting only behind Arelion (AS1299) and Tata Comm (AS6453). Of course, as always with BGP routes, what we see does exist, but there can be more that exists which we do not see.

Here’s a view of the prefix from Oregon Route Views:

```
route-views>sh ip bgp 35.212.128.0/17 long
BGP table version is 188809308, local router ID is 128.223.51.103
Status codes: s suppressed, d damped, h history, * valid, > best, i - internal,
r RIB-failure, S Stale, m multipath, b backup-path, f RT-Filter,
x best-external, a additional-path, c RIB-compressed,
Origin codes: i - IGP, e - EGP, ? - incomplete
RPKI validation codes: V valid, I invalid, N Not found

Network Next Hop Metric LocPrf Weight Path
V* 35.212.128.0/17 209.124.176.223 0 101 15169 i
V* 194.85.40.15 0 0 3267 1299 19527 i
V* 12.0.1.63 0 7018 6453 19527 i
V* 64.71.137.241 0 6939 15169 i
V* 212.66.96.126 0 20912 3257 15169 i
V* 208.51.134.254 0 0 3549 3356 15169 i
V* 37.139.139.17 0 0 57866 1299 19527 i
V* 91.218.184.60 0 49788 1299 19527 i
V* 193.0.0.56 0 3333 1257 1299 19527 i
V* 114.31.199.16 0 4826 3257 15169 i
V* 203.181.248.195 0 7660 2516 1299 19527 i
V* 4.68.4.46 0 0 3356 15169 i
V* 94.142.247.3 0 0 8283 6453 19527 i
V*> 202.232.0.2 0 2497 15169 i
V* 140.192.8.16 0 20130 6939 15169 i
V* 154.11.12.212 0 0 852 15169 i
V* 206.24.210.80 0 3561 209 3356 15169 i
V* 89.149.178.10 10 0 3257 15169 i
V* 217.192.89.50 0 3303 6453 19527 i
V* 162.250.137.254 0 4901 6079 3257 15169 i
V* 132.198.255.253 0 1351 11164 15169 i
route-views>
```

From the routing table, I think AS19527 is used where Google buys transit and AS15169 where it peers. 😀 This is also likely the reason for the limited availability of the Standard tier: it is probably offered only where Google can use one of these transit carriers, and cost-wise, I guess Google pays less for IP transit there than it would to carry those bits over its own network.
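The origin/neighbour pattern behind this reading can be tallied with a few lines of Python. The AS paths below are copied from the route-views output above; the script records, for each origin ASN, which ASNs appear immediately to its left (the networks the origin handed the route to on that path), after collapsing any AS-path prepending. The function names are my own, and this summarises a single snapshot from a single vantage point, so it supports the transit-vs-peering interpretation rather than proving it.

```python
from collections import Counter, defaultdict

# AS paths for 35.212.128.0/17, copied from the route-views output above.
AS_PATHS = [
    "101 15169", "3267 1299 19527", "7018 6453 19527", "6939 15169",
    "20912 3257 15169", "3549 3356 15169", "57866 1299 19527",
    "49788 1299 19527", "3333 1257 1299 19527", "4826 3257 15169",
    "7660 2516 1299 19527", "3356 15169", "8283 6453 19527", "2497 15169",
    "20130 6939 15169", "852 15169", "3561 209 3356 15169", "3257 15169",
    "3303 6453 19527", "4901 6079 3257 15169", "1351 11164 15169",
]


def collapse_prepends(asns: list[int]) -> list[int]:
    """Drop consecutive repeats caused by AS-path prepending."""
    return [a for i, a in enumerate(asns) if i == 0 or a != asns[i - 1]]


def neighbours_by_origin(paths: list[str]) -> dict[int, set[int]]:
    """For each origin ASN (rightmost), the set of ASNs seen immediately to its left."""
    result: dict[int, set[int]] = defaultdict(set)
    for path in paths:
        asns = collapse_prepends([int(a) for a in path.split()])
        if len(asns) >= 2:
            result[asns[-1]].add(asns[-2])
    return dict(result)


if __name__ == "__main__":
    print(Counter(int(p.split()[-1]) for p in AS_PATHS))  # paths per origin ASN
    for origin, nbrs in sorted(neighbours_by_origin(AS_PATHS).items()):
        print(origin, "<-", sorted(nbrs))
```

In this snapshot, AS19527 only ever appears directly behind AS1299 and AS6453, while AS15169 appears behind a wider set of networks (AS101, AS852, AS2497, AS3257, AS3356, AS6939 and AS11164).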

But this is just one side of the picture, i.e. Internet > Google. What matters more traffic-wise is Google > Internet, as Google is an outbound-heavy network. I cannot trace back to my CGNAT’ed Siti connection, so instead let’s analyse it from my Mumbai server (host01.bom.anuragbhatia.com - 45.64.190.62), which sits on my friend’s AS132933 network.

GCP US West > India - Premium Tier

```
me@cold-potato:~$ mtr -wr host01.bom.anuragbhatia.com
Start: 2023-11-03T00:18:57+0000
HOST: cold-potato Loss% Snt Last Avg Best Wrst StDev
1.|-- ??? 100.0 10 0.0 0.0 0.0 0.0 0.0
2.|-- 45.64.190.62.static.charotarbroadband.in 0.0% 10 221.2 221.4 221.2 221.9 0.2
me@cold-potato:~$
```

GCP US West > India - Standard Tier

```
me@hot-potato:~$ mtr -wr host01.bom.anuragbhatia.com
Start: 2023-11-03T00:19:05+0000
HOST: hot-potato Loss% Snt Last Avg Best Wrst StDev
1.|-- ??? 100.0 10 0.0 0.0 0.0 0.0 0.0
2.|-- host01.bom.anuragbhatia.com 0.0% 10 237.0 237.2 236.8 237.7 0.3
me@hot-potato:~$
```

Now, these traces are pretty much useless to read, because Google’s internal network doesn’t seem to decrement TTL on its hops; it looks like some sort of tunnel. Both traces look identical, but the latency differs, likely because of the return path, i.e. my server > Google. I tested a few other random destinations, and routing from Google > Indian IPs seems similar on the Standard vs Premium tier. Latency is consistently and visibly lower on the Premium tier, likely due to a better return path.
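Why do the hops vanish? Traceroute sends probes with TTL 1, 2, 3, … and lists whichever router decrements the TTL to zero and answers. The toy Python model below assumes, as the traces suggest, that Google’s internal routers simply never decrement the probe’s TTL (similar in effect to an MPLS core with TTL propagation disabled). `Router`, `probe`, `traceroute` and the router names are hypothetical, and real tunnels have extra details (for example, the tunnel egress may still show up as one hop) that this ignores.

```python
from dataclasses import dataclass


@dataclass
class Router:
    name: str
    decrements_ttl: bool = True  # False: hypothetical tunnel/core router that stays hidden


def probe(path: list[Router], destination: str, ttl: int) -> str:
    """Return who answers a probe sent with this initial TTL."""
    for router in path:
        if router.decrements_ttl:
            ttl -= 1
            if ttl == 0:
                return router.name  # this router sends ICMP Time Exceeded
    return destination  # the probe survived every router and reached the host


def traceroute(path: list[Router], destination: str, max_ttl: int = 30) -> list[str]:
    """Send probes with TTL 1, 2, 3, ... until the destination answers."""
    hops = []
    for ttl in range(1, max_ttl + 1):
        responder = probe(path, destination, ttl)
        hops.append(responder)
        if responder == destination:
            break
    return hops


if __name__ == "__main__":
    names = ("vm-gateway", "core-1", "core-2", "edge")
    hidden = [Router(names[0])] + [Router(n, decrements_ttl=False) for n in names[1:]]
    visible = [Router(n) for n in names]
    print(traceroute(hidden, "host01"))   # only the gateway and the destination
    print(traceroute(visible, "host01"))  # every router shows up
```

With the core hidden, the whole backbone collapses into a single jump from the first hop to the destination, which matches the shape of the two traces above.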

Let’s check another country, like Germany:

GCP US West > Germany - Premium Tier

```
me@cold-potato:~$ mtr server7.anuragbhatia.com -wr
Start: 2023-11-03T00:23:13+0000
HOST: cold-potato Loss% Snt Last Avg Best Wrst StDev
1.|-- ??? 100.0 10 0.0 0.0 0.0 0.0 0.0
2.|-- ae1-3121.edge8.Frankfurt1.level3.net 90.0% 10 153.6 153.6 153.6 153.6 0.0
3.|-- GIGA-HOSTIN.edge8.Frankfurt1.Level3.net 0.0% 10 160.9 161.0 160.6 161.7 0.3
4.|-- server7.anuragbhatia.com 0.0% 10 159.3 159.4 159.2 159.8 0.2
me@cold-potato:~$
```

GCP US West > Germany - Standard Tier

```
me@hot-potato:~$ mtr server7.anuragbhatia.com -wr
Start: 2023-11-03T00:23:15+0000
HOST: hot-potato Loss% Snt Last Avg Best Wrst StDev
1.|-- ??? 100.0 10 0.0 0.0 0.0 0.0 0.0
2.|-- 72.14.237.48 0.0% 10 7.0 6.7 6.3 7.0 0.2
3.|-- 142.250.167.79 0.0% 10 6.5 6.6 6.1 7.2 0.3
4.|-- ??? 100.0 10 0.0 0.0 0.0 0.0 0.0
5.|-- GIGA-HOSTIN.edge8.Frankfurt1.Level3.net 0.0% 10 171.0 171.4 171.0 173.7 0.8
6.|-- server7.anuragbhatia.com 0.0% 10 170.3 170.4 170.1 171.2 0.4
me@hot-potato:~$
```

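This path is different. On the Standard tier, hops 2 and 3 (at ~6-7 ms) are still Google in the US, and the handover to Lumen/Level3 likely happens at the 4th (non-responding) hop, within the US. On the Premium tier, by contrast, the first visible hop outside Google is already Level3 in Frankfurt.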

Through trial and error, I see that Google sometimes uses different routes on these tiers in the outbound direction, and in many cases the same path. The example above was for Contabo; here’s a test from both tiers to Hetzner:

GCP US West > Germany (Hetzner) - Premium Tier

```
me@cold-potato:~$ mtr -wr ns1.your-server.de.
Start: 2023-11-03T00:32:03+0000
HOST: cold-potato Loss% Snt Last Avg Best Wrst StDev
1.|-- ??? 100.0 10 0.0 0.0 0.0 0.0 0.0
2.|-- ??? 100.0 10 0.0 0.0 0.0 0.0 0.0
3.|-- core1.fra.hetzner.com 0.0% 10 142.5 142.4 142.1 142.6 0.2
4.|-- core11.nbg1.hetzner.com 0.0% 10 144.8 145.0 144.7 145.3 0.2
5.|-- ns1.your-server.de 0.0% 10 145.4 145.3 145.1 145.5 0.1
me@cold-potato:~$
```

GCP US West > Germany (Hetzner) - Standard Tier

```
me@hot-potato:~$ mtr -wr ns1.your-server.de.
Start: 2023-11-03T00:32:06+0000
HOST: hot-potato Loss% Snt Last Avg Best Wrst StDev
1.|-- ??? 100.0 10 0.0 0.0 0.0 0.0 0.0
2.|-- 142.250.169.173 0.0% 10 150.2 154.4 149.4 190.3 12.7
3.|-- core4.fra.hetzner.com 0.0% 10 148.8 148.2 147.8 148.8 0.3
4.|-- core12.nbg1.hetzner.com 0.0% 10 152.5 152.4 152.1 152.9 0.2
5.|-- ns1.your-server.de 0.0% 10 155.2 155.0 154.7 155.3 0.2
me@hot-potato:~$
```

This again shows the same path, with lower latency on the Premium tier due to the Hetzner > Google return path. I am unsure why in this case Google did not do hot potato routing on the Standard tier; maybe it comes down to a cost analysis of these specific routes. Also, if you are wondering how different forward paths can be visible within the same backbone, it has to do with their SDN. Essentially, Google does not pick one path as the “best path” for a given route, but keeps all the routes, and the choice among them is decided/influenced by the SDN controller. Bikash Koley’s presentation touches on this a bit. In short, in Google’s design each application can take its own outbound path.
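As a toy illustration of the difference between one “best path” per prefix and per-application choice, the Python sketch below keeps every candidate exit for a destination prefix and lets a policy per application class rank them. This is not Google’s SDN controller or its logic: the exit names, latencies, costs and policy names are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Egress:
    name: str
    latency_ms: float  # made-up latency to the destination prefix
    cost: float        # made-up relative cost per GB


# Hypothetical candidate exits kept for one destination prefix (none discarded).
CANDIDATES = [
    Egress("peering-frankfurt", latency_ms=100.0, cost=1.0),
    Egress("transit-us-west", latency_ms=110.0, cost=0.4),
]

# Hypothetical per-application policies: each ranks the same candidates differently.
POLICIES = {
    "latency-sensitive": lambda e: (e.latency_ms, e.cost),
    "cost-sensitive": lambda e: (e.cost, e.latency_ms),
}


def choose_egress(app_class: str, candidates: list[Egress]) -> Egress:
    """Pick an exit per application class instead of one global best path."""
    return min(candidates, key=POLICIES[app_class])


if __name__ == "__main__":
    for app in POLICIES:
        print(app, "->", choose_egress(app, CANDIDATES).name)
```

A classic router would install only one of these exits for the prefix; keeping both lets two flows to the same destination leave the backbone at different places.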

With the hope that your cat and dog videos keep using Google’s cold potato routing and reach you with the lowest possible latency, time to end this post!